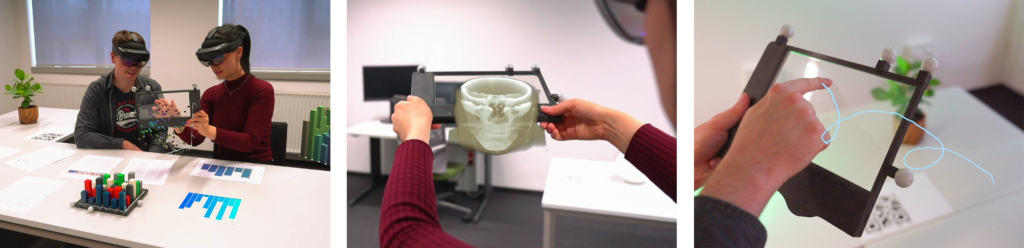

The CleAR Sight research platform allows multiple people to use a touch-enabled, transparent interaction panel and perform tasks such as working with abstract data visualizations, exploring volumetric data sets, and making in-situ annotations.

Abstract

In this paper, we examine the potential of incorporating transparent, handheld devices into head-mounted Augmented Reality (AR). Additional mobile devices have long been successfully used in head-mounted AR, but they obscure the visual context and real world objects during interaction. Transparent tangible displays can address this problem, using either transparent OLED screens or rendering by the head-mounted display itself. However, so far, there is no systematic analysis of the use of such transparent tablets in combination with AR head-mounted displays (HMDs), with respect to their benefits and arising challenges. We address this gap by introducing a research platform based on a touch-enabled, transparent interaction panel, for which we present our custom hardware design and software stack in detail. Furthermore, we developed a series of interaction concepts for this platform and demonstrate them in the context of three use case scenarios: the exploration of 3D volumetric data, collaborative visual data analysis, and the control of smart home appliances. We validate the feasibility of our concepts with interactive prototypes that we used to elicit feedback from HCI experts. As a result, we contribute to a better understanding of how transparent tablets can be integrated into future AR environments.

Research Article

Accompanying Video

Building Instructions & Source Code

Step-by-step building instructions, additional resources and source code are available:

Step-by-Step Building Instructions

Application Source Code

- GitHub Repository: https://github.com/imldresden/clear-sight/

Publications

@inproceedings{fixme,

author = {Katja Krug and Wolfgang B\"{u}schel and Konstantin Klamka and Raimund Dachselt},

title = {CleAR Sight: Exploring the Potential of Interacting with Transparent Tablets in Augmented Reality},

booktitle = {Proceedings of the 21st IEEE International Symposium on Mixed and Augmented Reality},

series = {ISMAR '22},

year = {2022},

month = {10},

isbn = {978-1-6654-5325-7/22},

location = {Singapore},

pages = {196--205},

doi = {10.1109/ISMAR55827.2022.00034},

publisher = {IEEE}

}

@inproceedings{fixme,

author = {Wolfgang B\"{u}schel and Katja Krug and Konstantin Klamka and Raimund Dachselt},

title = {Demonstrating CleAR Sight: Transparent Interaction Panels for Augmented Reality},

booktitle = {Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems},

year = {2023},

month = {2},

isbn = {978-1-4503-9422-2/23/04},

location = {Hamburg, Germany},

pages = {1--5},

numpages = {5},

doi = {10.1145/3544549.3583891},

publisher = {ACM}

}

Related Publications

@inproceedings{fixme,

author = {Weizhou Luo and Eva Goebel and Patrick Reipschl\"{a}ger and Mats Ole Ellenberg and Raimund Dachselt},

title = {Demonstrating Spatial Exploration and Slicing of Volumetric Medical Data in Augmented Reality with Handheld Devices},

booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality},

year = {2021},

month = {10},

location = {Bari, Italy}

}

@inproceedings{luo2021exploring,

author = {Weizhou Luo and Eva Goebel and Patrick Reipschl\"{a}ger and Mats Ole Ellenberg and Raimund Dachselt},

title = {Exploring and Slicing Volumetric Medical Data in Augmented Reality Using a Spatially-Aware Mobile Device},

booktitle = {2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

year = {2021},

month = {10},

location = {Bari, Italy},

doi = {10.1109/ISMAR-Adjunct54149.2021.00076},

publisher = {IEEE}

}

@inproceedings{DIS-2014-tPad,

author = {Juan David Hincapi\'{e}-Ramos and Sophie Roscher and Wolfgang B\"{u}schel and Ulrike Kister and Raimund Dachselt and Pourang Irani},

title = {tPad: Designing Transparent-Display Mobile Interactions},

booktitle = {Proceedings of the 10th ACM Conference on Designing Interactive Systems},

year = {2014},

month = {6},

location = {Vancouver, BC, Canada},

pages = {161--170},

numpages = {10},

doi = {10.1145/2598510.2598578},

url = {https://doi.org/10.1145/2598510.2598578},

publisher = {ACM},

keywords = {Transparent Displays, Transparent Mobile Devices, tPad, Flipping, Tap’n Flip, Surface Capture, Contact AR}

}

@inproceedings{PerDis-2014-cAR,

author = {Juan David Hincapi\'{e}-Ramos and Sophie Roscher and Wolfgang B\"{u}schel and Ulrike Kister and Raimund Dachselt and Pourang Irani},

title = {cAR: Contact Augmented Reality with Transparent-Display Mobile Devices},

booktitle = {Proceedings of the 3rd International Symposium on Pervasive Displays},

year = {2014},

month = {6},

location = {Copenhagen, Denmark},

pages = {80--85},

numpages = {6},

doi = {10.1145/2611009.2611014},

url = {https://doi.org/10.1145/2611009.2611014},

keywords = {Contact Augmented Reality, Transparent Devices}

}

@inproceedings{Büschel-2013-CHI,

author = {Wolfgang B\"{u}schel and Andr\'{e} Viergutz and Raimund Dachselt},

title = {Towards Interaction with Transparent and Flexible Displays},

booktitle = {CHI 2013 Workshop on Displays Take New Shape: An Agenda for Future Interactive Surfaces},

year = {2013},

month = {4},

numpages = {4},

keywords = {mobile devices, transparent and flexible interfaces, interaction techniques}

}

Related Student Theses

Combining Augmented Reality and Mobile Devices for the Immersive Exploration of Medical Data Visualizations

Tino Helmig, July 3, 2020 to March 2, 2021

Supervision: Eva Goebel, Weizhou Luo, Patrick Reipschläger, Raimund Dachselt

Unterstützung von Augmented-Reality-Datenanalyse mittels 3D-registrierter Eingabe auf einer transparenten Oberfläche (Supporting Augmented Reality Data Analysis with 3D-Registered Input on a Transparent Surface)

Katja Krug, February 5, 2021 to July 9, 2021

Supervision: Wolfgang Büschel, Raimund Dachselt

Acknowledgments

This work was funded by the Deutsche Forschungsgemeinschaft (DFG) under Germany’s Excellence Strategy – EXC 2050/1 – 390696704 – Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) and EXC 2068 – 390729961 – Cluster of Excellence “Physics of Life”, as well as DFG grant 389792660 as part of TRR 248 – CPEC (see https://perspicuous-computing.science). We also acknowledge the funding by the Federal Ministry of Education and Research of Germany in the program “Souverän. Digital. Vernetzt.”, joint project 6G-life, project ID 16KISK001K, and by Sächsische AufbauBank (SAB), project ID 100400076 “Augmented Reality and Artificial Intelligence supported Laparoscopic Imagery in Surgery” (ARAILIS) as TG 70.