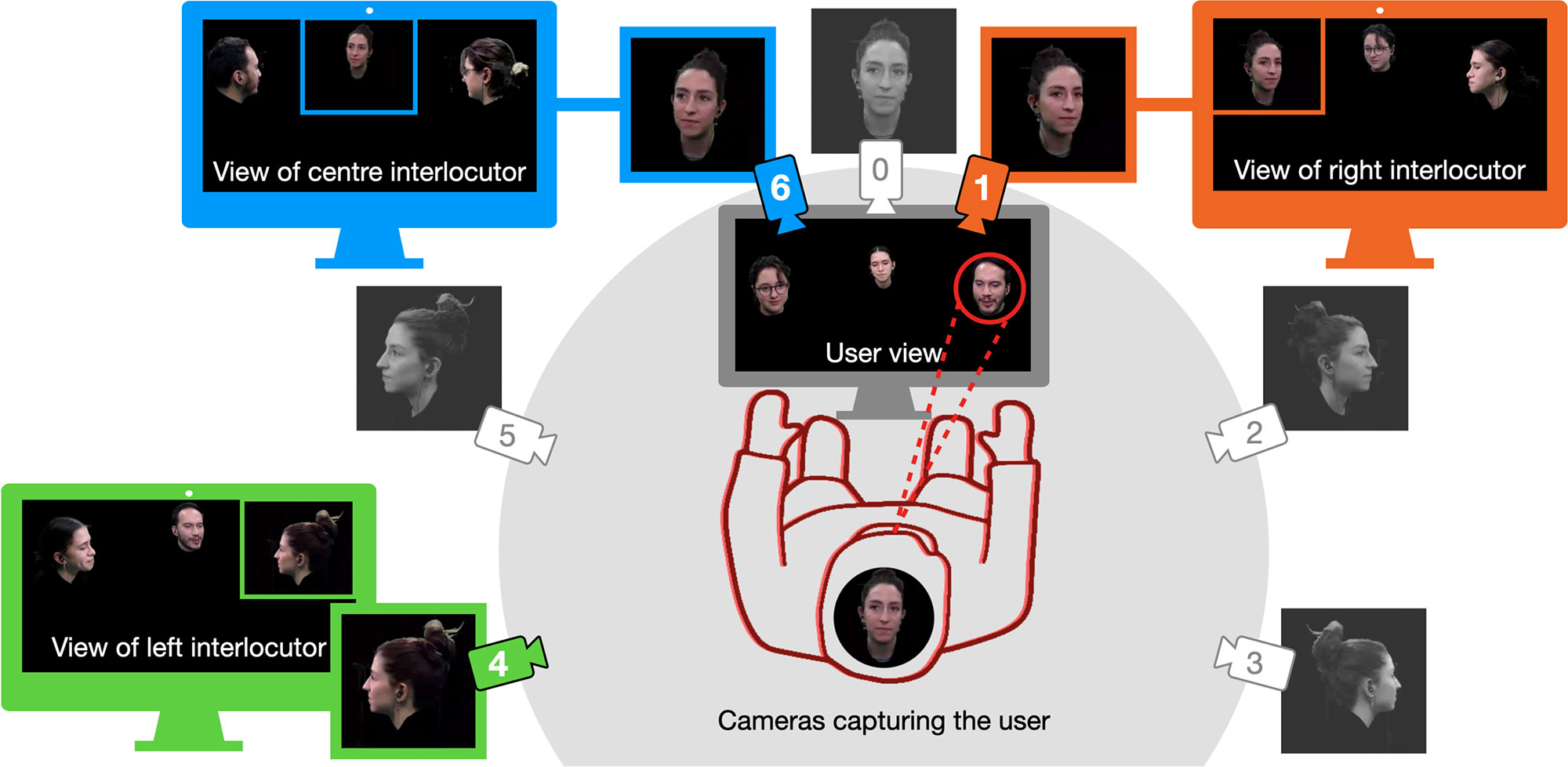

Gazing Heads is a round-table virtual meeting concept that uses only a single screen per participant. It enables direct eye contact, and signals gaze via controlled head rotation.

Abstract

Videoconferencing has become a ubiquitous medium for collaborative work. It does, however, suffer from various drawbacks such as Zoom fatigue. This paper addresses the quality of user experience by exploring an enhanced system concept with the capability of conveying gaze and attention. Gazing Heads is a round-table virtual meeting concept that uses only a single screen per participant. It enables direct eye contact, and signals gaze via controlled head rotation. The technology to realise this novel concept is not yet mature, so we built a camera-based simulation for four simultaneous videoconference users. We conducted a user study comparing Gazing Heads with a conventional “Tiled View” videoconferencing system, for 20 groups of 4 people, on each of two tasks. The study found that head rotation clearly conveys gaze and strongly enhances the perception of attention. Measurements of turn-taking behaviour did not differ decisively between the two systems (though there were significant differences between the two tasks). A novel insight in comparison to prior studies is that there was a significant increase in mutual eye contact with Gazing Heads, and that users clearly felt more engaged, more encouraged to participate and more socially present. Overall, participants expressed a clear preference for Gazing Heads. These results suggest that fully implementing the Gazing Heads concept, using modern computer vision technology as it matures, could significantly enhance the experience of videoconferencing.

We found that with Gazing Heads it is effortlessly apparent who is looking at whom. Gazing Heads proved beneficial for social presence and user engagement.

Media

Presentation @ ACM CHI’25

The work was published in the journal ACM Transactions on Computer-Human Interaction. We will present it at the ACM CHI conference on April 29.

To be presented at ACM CHI’25. Watch the presentation on YouTube.

Publication

@article{Schuessler-2024-GazingHeads,
  author = {Martin Schuessler and Luca Hormann and Raimund Dachselt and Andrew Blake and Carsten Rother},
  title = {Gazing Heads: Investigating Gaze Perception in Video-Mediated Communication},
  journal = {ACM Transactions on Computer-Human Interaction (TOCHI)},
  volume = {31},
  issue = {3},
  year = {2024},
  month = {6},
  doi = {10.1145/3660343},
  url = {https://doi.org/10.1145/3660343},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA}
}

List of additional material

Acknowledgments

We thank Radek Mackowiak and Titus Leistner for their technical support in building our experimental setup, Tianling Yang for her help with the initial literature review, and Ihtisham Ahmad for implementing parts of the eye-tracking analysis and generating Figure 5 of the article. The authors are grateful for the feedback of the anonymous reviewers, which was very helpful in improving this article.

This work was co-funded by the Federal Ministry of Education and Research of Germany in the programs “Weizenbaum Institute for the Networked Society – The German Internet Institute” (project ID: 16DII113), “Souverän. Digital. Vernetzt.”, joint project 6G-life (project ID: 16KISK001K), and “Berlin Center for Machine Learning”.

We also acknowledge financial support from the ERC Consolidator Grant RSM (647769) and from the Deutsche Forschungsgemeinschaft (DFG) under Germany’s Excellence Strategy – EXC 2050/1 – 390696704 – Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) and DFG grant 389792660 as part of TRR 248 – CPEC (see https://perspicuous-computing.science).