A new generation of immersive, in-the-wild Information Retrieval systems

Abstract

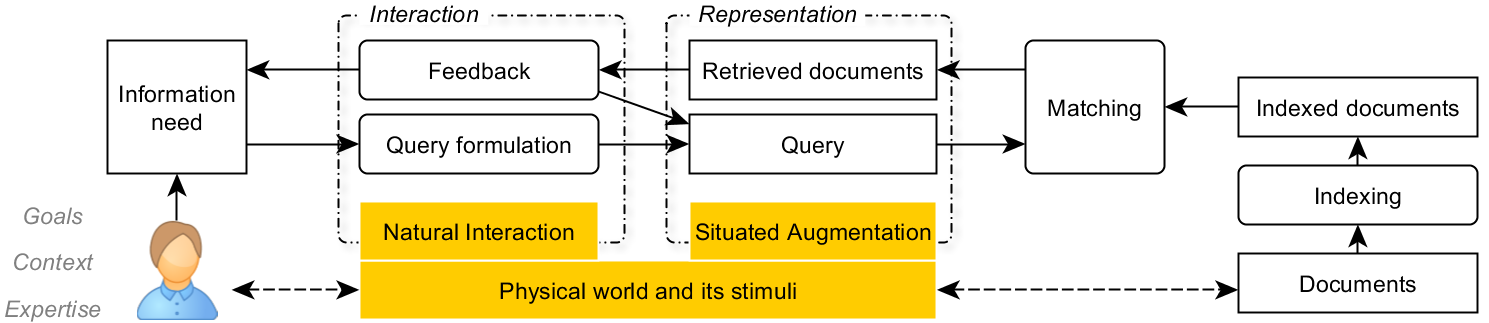

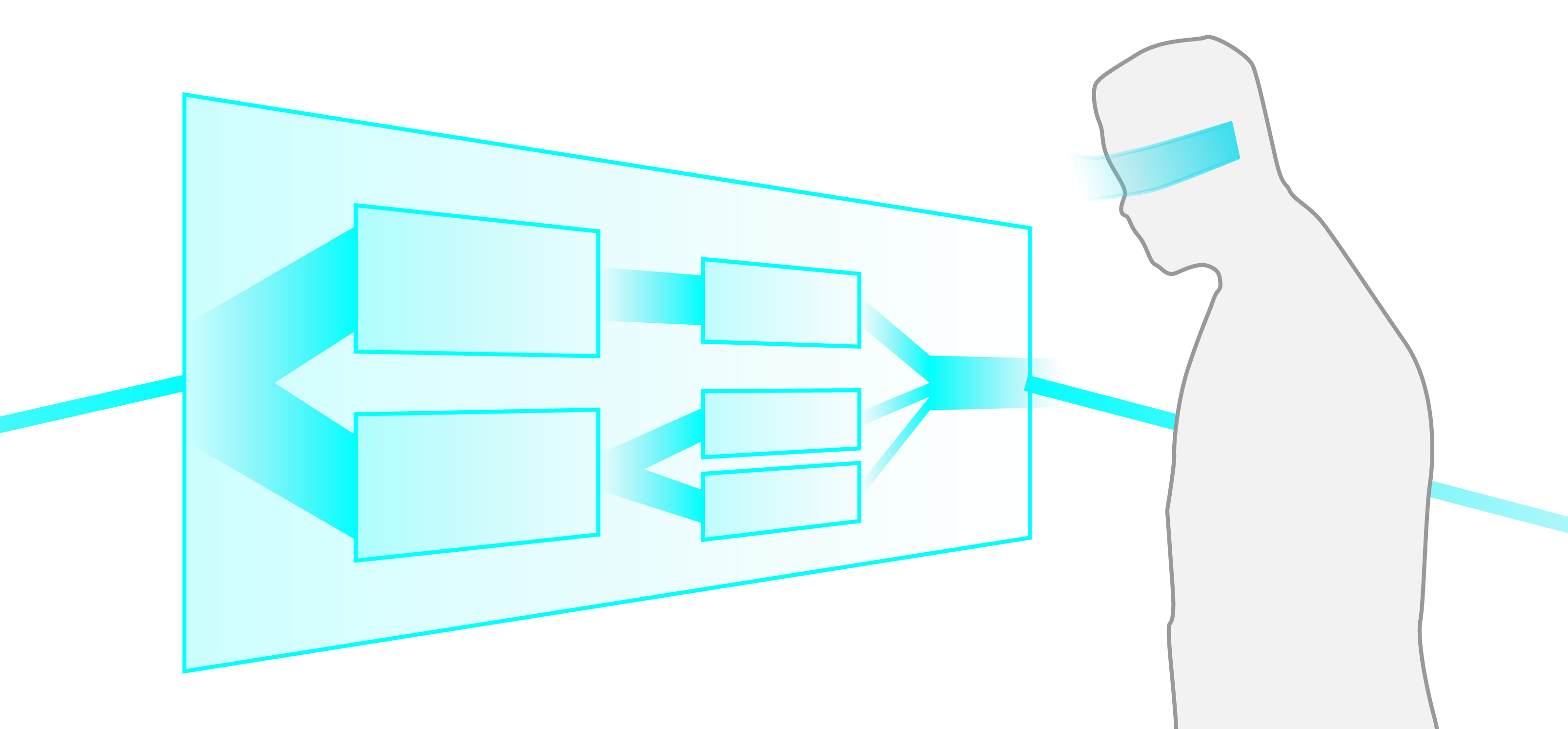

Today, the widespread use of mobile devices allows users to search for information “on the go”, whenever and wherever they want, no longer confining Information Retrieval to classic desktop interfaces. We believe that technical advances in Augmented Reality (AR) will allow Information Retrieval to go even further, making use of both the users’ surroundings and their ability to interact with the physical world. We present the fundamental concept of Reality-Based Information Retrieval (RBIR), which combines the classic Information Retrieval process with Augmented Reality technologies to provide context-dependent search cues and situated visualizations of the query and the results. Since information needs often stem from real-world experiences, this novel combination has the potential to better support both Just-in-time Information Retrieval and serendipity. Based on an extensive literature review, we propose a conceptual framework for Reality-Based Information Retrieval.

Paper @ ACM CHIIR ’18

The paper presenting our conceptual framework of Reality-Based Information Retrieval won the Best Paper Award at the ACM SIGIR Conference on Human Information Interaction and Retrieval (CHIIR) 2018, held March 11-15, 2018 in New Brunswick, New Jersey, USA.

Envisioned Scenarios

We illustrate the basic concept of Reality-Based Information Retrieval with a few envisioned scenarios:

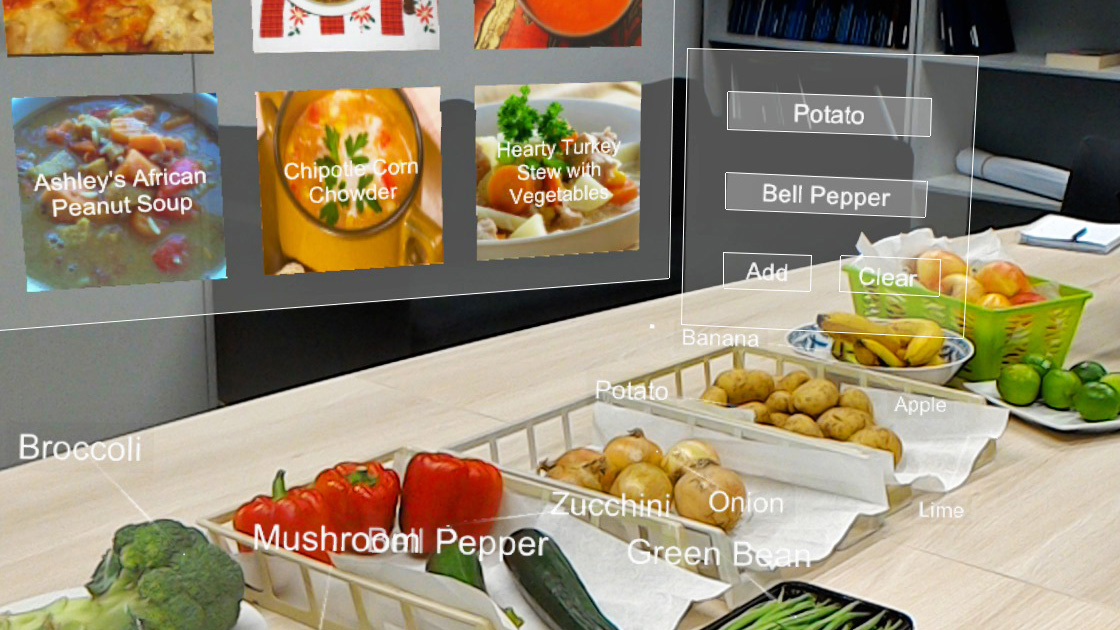

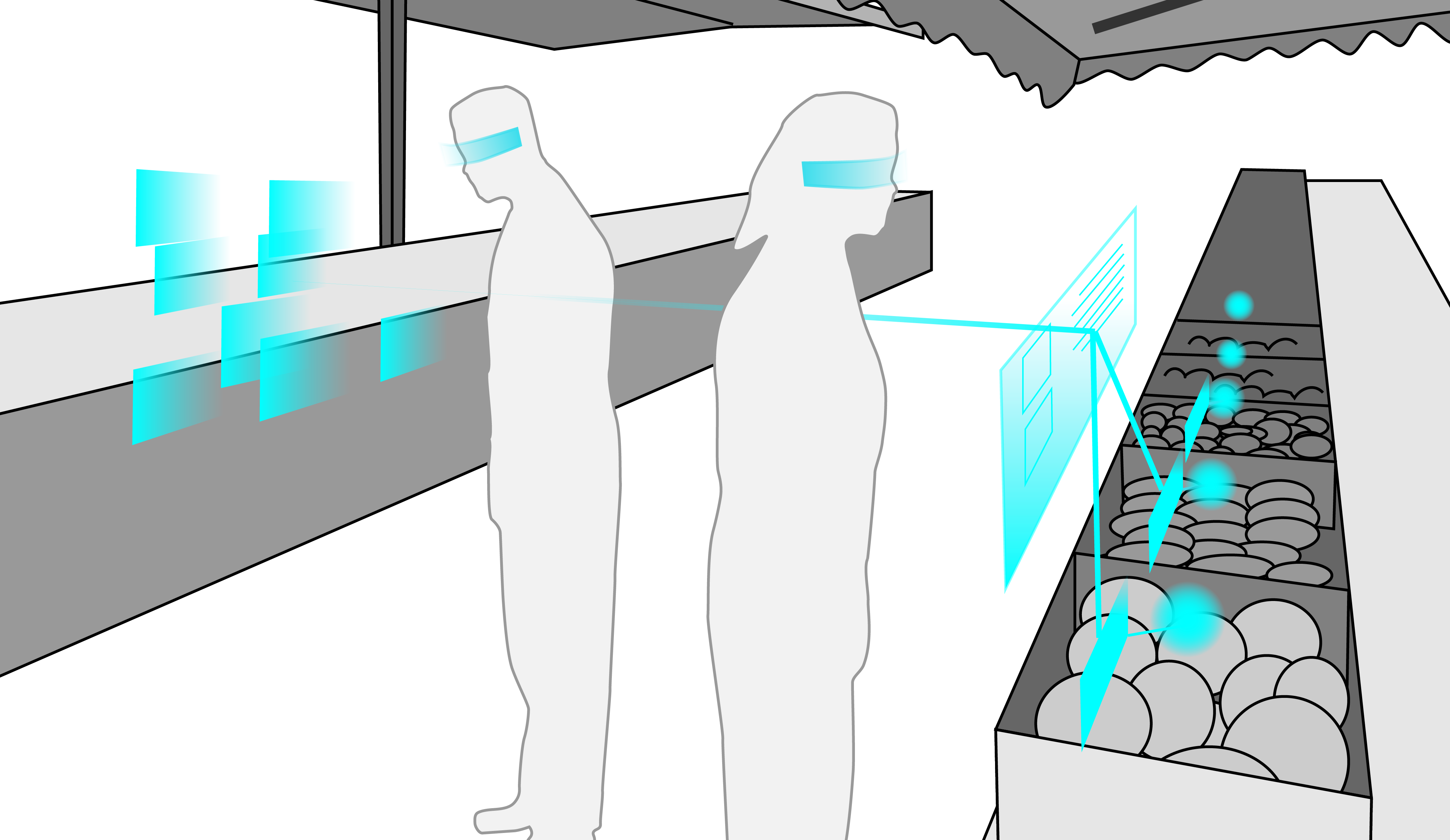

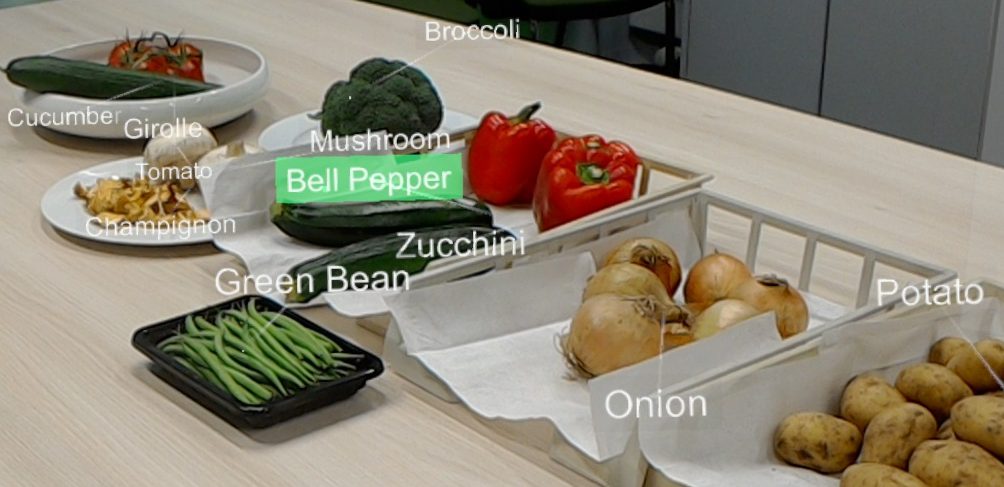

Recipe search: Alice and Bob are at the marketplace to buy groceries. As they look at the different fruits and vegetables, small indicators light up, showing that the system has additional information ready for them. Recipes appear scattered in front of them, with the most relevant results placed closest to them.

Literature search: Alex takes a book from his housemate’s bookshelf and searches for related publications by the same author. The RBIR application identifies the book and its metadata. The writing on the book cover is augmented with overlaid virtual controls, which Alex physically touches to start a search. The results are presented in the bookshelf in front of him.

Image retrieval: Anne searches for pictures to decorate her living room by virtually placing relevant results from an image database on her wall. The RBIR application extracts visual features from the surroundings for content-based image retrieval to filter the result set, e. g., by dominant colors.

Video retrieval: While watching a soccer game, Phil searches for soccer videos with scenes showing similar player positions for comparison. The RBIR application analyzes the relative positions and motion vectors of the identified players on the field and suggests similar recordings.
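The image retrieval scenario filters results by the dominant colors of the surroundings. The sketch below illustrates one simple way to do this, assuming that both the candidate images and the camera view of the wall are already decoded into lists of RGB tuples. The function names, the 4-bins-per-channel quantization, and the distance threshold are hypothetical choices for illustration, not part of the RBIR framework; a production system would more likely compare colors in a perceptual color space such as CIELAB.

```python
from collections import Counter
from math import dist

Color = tuple[int, int, int]  # (r, g, b), each channel 0-255


def dominant_color(pixels: list[Color], bins_per_channel: int = 4) -> Color:
    """Quantize pixels into a coarse RGB grid; return the center of the most populated cell."""
    step = 256 // bins_per_channel
    counts = Counter(tuple(channel // step for channel in p) for p in pixels)
    cell, _ = counts.most_common(1)[0]
    return tuple(i * step + step // 2 for i in cell)


def filter_by_wall_color(images: dict[str, list[Color]], wall_pixels: list[Color],
                         max_distance: float = 100.0) -> list[str]:
    """Keep images whose dominant color is within max_distance (Euclidean, in RGB) of the wall's."""
    wall_color = dominant_color(wall_pixels)
    return [name for name, pixels in images.items()
            if dist(dominant_color(pixels), wall_color) <= max_distance]


# Usage with tiny synthetic "images" (lists of pixels):
wall = [(200, 200, 190)] * 10
images = {
    "beach": [(210, 205, 180)] * 8 + [(20, 20, 20)] * 2,
    "forest": [(30, 120, 40)] * 10,
}
print(filter_by_wall_color(images, wall))  # ['beach']
```

Coarse quantization makes the dominant color robust to small lighting variations, at the cost of merging similar hues into a single cell.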

A Conceptual Framework for Reality-Based Information Retrieval

The Physical World

Embedding the Information Retrieval (IR) process into the physical world is one of the key aspects of Reality-Based Information Retrieval. Sensory input from the real world, mainly visual and auditory stimuli, is one of the main triggers of information needs.

Physical World Stimuli

We distinguish several classes of physical-world stimuli that form a progression from physical to human-defined properties:

- Low-level features that can be directly extracted from the input stream.

- Mid-level features that are usually based on classification or pattern detection processes.

- High-level concepts that include identities derived from low- and mid-level features and background knowledge.

- Associated data and services that are either provided by a (smart) object itself or are externally hosted and logically connected to an object.
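Because the four classes form an ordered progression, they map naturally onto an ordered enumeration. The following is a minimal, hypothetical data model (all class, field, and function names are illustrative assumptions, not taken from the framework) that tags each extracted stimulus with its class and with the physical object it belongs to.

```python
from dataclasses import dataclass
from enum import IntEnum


class StimulusLevel(IntEnum):
    """Stimulus classes, ordered from physical to human-defined properties."""
    LOW_LEVEL_FEATURE = 1   # extracted directly from the input stream, e.g., a color
    MID_LEVEL_FEATURE = 2   # result of classification or pattern detection
    HIGH_LEVEL_CONCEPT = 3  # identity derived from features plus background knowledge
    ASSOCIATED_DATA = 4     # data/services provided by or linked to an object


@dataclass(frozen=True)
class Stimulus:
    level: StimulusLevel
    name: str        # hypothetical property name, e.g., "dominant_color"
    value: object    # extracted or derived value
    object_id: str   # hypothetical ID of the physical object the stimulus belongs to


def most_abstract(stimuli: list[Stimulus]) -> Stimulus:
    """Return the stimulus furthest along the physical-to-human-defined progression."""
    return max(stimuli, key=lambda s: s.level)


# Usage: stimuli recognized for one apple at the market.
apple = [
    Stimulus(StimulusLevel.LOW_LEVEL_FEATURE, "dominant_color", "red", "apple-1"),
    Stimulus(StimulusLevel.MID_LEVEL_FEATURE, "object_class", "fruit", "apple-1"),
    Stimulus(StimulusLevel.HIGH_LEVEL_CONCEPT, "identity", "apple", "apple-1"),
]
print(most_abstract(apple).name)  # identity
```

Ordering the classes lets a system, for example, prefer the most abstract stimulus recognized for an object as a default query seed and fall back to lower-level features when recognition fails.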

Contextual Cues

Deep contextual knowledge facilitates sensemaking and is also the gateway to attached data and services, making the system more powerful than one relying on context-free data alone. However, information about the context may be missing, limited, or misinterpreted. We differentiate between

- context-free cues, which are usually low-level features (e. g., color),

- cues with weak context, e. g., knowledge about a general setting such as “office”, which helps to identify object classes, and

- cues with strong context, e. g., the exact location, beacons, or QR codes.
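Since context information may be missing or limited, a system can check which kinds of cues are actually available and adapt accordingly. A minimal sketch, assuming cues arrive already labeled by kind; the labels and their mapping to the three categories are hypothetical and simply follow the examples in the list above.

```python
from enum import Enum


class ContextStrength(Enum):
    FREE = "context-free"
    WEAK = "weak context"
    STRONG = "strong context"


# Hypothetical mapping from cue kinds to context strength, following the examples above.
CUE_STRENGTH = {
    "color": ContextStrength.FREE,
    "setting": ContextStrength.WEAK,     # e.g., "office"
    "location": ContextStrength.STRONG,  # exact position
    "beacon": ContextStrength.STRONG,
    "qr_code": ContextStrength.STRONG,
}

_ORDER = [ContextStrength.FREE, ContextStrength.WEAK, ContextStrength.STRONG]


def strongest_context(cue_kinds: list[str]) -> ContextStrength:
    """Return the strongest context available; unknown cue kinds count as context-free."""
    strengths = [CUE_STRENGTH.get(kind, ContextStrength.FREE) for kind in cue_kinds]
    return max(strengths, key=_ORDER.index, default=ContextStrength.FREE)


print(strongest_context(["color", "setting"]).value)  # weak context
```

Treating unknown cue kinds as context-free is a conservative default: the system never assumes a stronger context than it can justify.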

Output

The physical world is not only the source of information needs and query input. By appropriating existing displays or embedded physicalizations [Willett et al. 2017], i. e., physical objects used for in-situ data representation, the physical world can also be used to visualize queries or result sets, or even to give acoustic feedback.

Situated Augmentation for Reality-based Information Retrieval

The registration of content in 3D forms the connection between the physical and the virtual world. Aligning virtual objects with related physical objects (locally) or with the physical world (globally) makes them intuitive to understand and easier to interpret. Within our RBIR framework, we propose three concepts for Situated Augmentation, described below.
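Local and global registration differ in which coordinate frame virtual content is attached to. The sketch below illustrates the difference under a simplifying assumption: tracked object poses are reduced to a position plus a yaw angle about the vertical axis, whereas real AR frameworks track full 6-DoF poses (typically as quaternions or 4x4 matrices). `Pose` and `to_world` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]  # (x, y, z) in meters, y pointing up


@dataclass(frozen=True)
class Pose:
    """Tracked pose of a physical object, simplified to position plus yaw (rotation about y)."""
    position: Vec3
    yaw: float  # radians


def to_world(offset: Vec3, anchor: Optional[Pose]) -> Vec3:
    """Return world coordinates for a piece of virtual content.

    anchor is None   -> globally registered: offset already is a world position.
    anchor is a Pose -> locally registered: rotate offset by the anchor's yaw, then translate.
    """
    if anchor is None:
        return offset
    x, y, z = offset
    c, s = math.cos(anchor.yaw), math.sin(anchor.yaw)
    ax, ay, az = anchor.position
    # Rotation about the vertical y-axis, followed by translation to the anchor position.
    return (ax + c * x + s * z, ay + y, az - s * x + c * z)


# Usage: a label 0.3 m above a book follows the book; a wall picture stays where it is.
book = Pose(position=(1.0, 0.8, 2.0), yaw=0.0)
print(to_world((0.0, 0.3, 0.0), book))
print(to_world((3.0, 1.5, 0.0), None))
```

Locally registered content is recomputed whenever the anchor's pose changes, so it moves with the object; globally registered content stays fixed in the room.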

Situated Stimulus

We define a Situated Stimulus as the representation of real-world object properties (see above) as input for a search query. Ideally, but not necessarily, Situated Stimuli correspond to the psychological stimuli from the physical world that trigger an information need. It is important that users mentally associate them with the objects or environments they belong to. This connection can be supported visually by placing the labels near the corresponding object or by linking them to it with lines. Additional visual variables can encode, e. g., the type of the underlying property.
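Placing labels near the corresponding object and linking them with lines can be sketched as follows. This is a minimal illustration, assuming each detected object comes with an axis-aligned bounding box in world coordinates; the stacking layout, the margins, and the color coding of property types (one possible additional visual variable) are hypothetical.

```python
from dataclasses import dataclass
from typing import NamedTuple

Vec3 = tuple[float, float, float]  # (x, y, z) in meters, y pointing up


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box of a detected object in world coordinates."""
    min_corner: Vec3
    max_corner: Vec3


class PlacedLabel(NamedTuple):
    text: str
    color: str
    position: Vec3
    leader: tuple[Vec3, Vec3]  # line from the label down to the object


# Hypothetical color coding of the underlying property type.
TYPE_COLORS = {"low": "#9e9e9e", "mid": "#2196f3", "high": "#4caf50", "associated": "#ff9800"}


def place_labels(box: Box, labels: list[tuple[str, str]],
                 margin: float = 0.05, spacing: float = 0.04) -> list[PlacedLabel]:
    """Stack (text, property_type) labels above the object, each linked to it by a leader line."""
    (x0, _, z0), (x1, y1, z1) = box.min_corner, box.max_corner
    top_center = ((x0 + x1) / 2, y1, (z0 + z1) / 2)
    placed = []
    for i, (text, kind) in enumerate(labels):
        position = (top_center[0], y1 + margin + i * spacing, top_center[2])
        color = TYPE_COLORS.get(kind, "#ffffff")  # unknown types fall back to white
        placed.append(PlacedLabel(text, color, position, (position, top_center)))
    return placed


for label in place_labels(Box((0.0, 0.0, 0.0), (0.2, 0.3, 0.1)), [("apple", "high"), ("red", "low")]):
    print(label.text, label.color, label.position)
```

Each leader line runs from its label to the top center of the object's box, which keeps the association visible even when several labels are stacked.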

Situated Query Representation

The visualization of queries depends on their complexity and on the requirements of the use case. For complex queries, advanced 2D virtual user interfaces [Ens et al. 2014] that show the selected terms and their relations can provide an overview of the request and also allow users to edit it.

Situated Result Representation

The representation of search results plays a central role in an RBIR application. AR offers a number of possibilities, determined by

- the intrinsic order/structure of the result set(s), e. g., ordered by relevance, hierarchy, certain properties like timestamp, etc.,

- the reference to the real world, e. g., placing visualizations relative to the user or relative to the environment, and

- the mapping of the result space, e. g., using metaphors or local coordinate systems.
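The recipe scenario places the most relevant results closest to the user. The sketch below combines the three aspects above in their simplest form: the intrinsic order is relevance, the reference is the user, and the mapping is a linear function from relevance to distance, with results fanned out across the field of view. All names, distances, and the linear mapping are assumptions for illustration.

```python
import math


def place_results_by_relevance(results, user_pos=(0.0, 0.0), user_heading=0.0,
                               near=0.8, far=2.5, spread=math.radians(90)):
    """Lay out results on the ground plane in front of the user; more relevant means closer.

    results: list of (result_id, relevance) pairs, relevance expected in [0, 1].
    user_heading: viewing direction in radians, 0 pointing along +z.
    Returns {result_id: (x, z)} in the same ground-plane coordinates as user_pos.
    """
    ranked = sorted(results, key=lambda r: r[1], reverse=True)
    n = len(ranked)
    positions = {}
    for i, (result_id, relevance) in enumerate(ranked):
        relevance = min(max(relevance, 0.0), 1.0)  # clamp to [0, 1]
        distance = far - relevance * (far - near)  # relevance 1 -> near, 0 -> far
        # Fan results out evenly across the field of view, in rank order.
        offset = 0.0 if n == 1 else -spread / 2 + i * spread / (n - 1)
        angle = user_heading + offset
        positions[result_id] = (user_pos[0] + distance * math.sin(angle),
                                user_pos[1] + distance * math.cos(angle))
    return positions


recipes = [("apple pie", 0.9), ("fruit salad", 0.6), ("chutney", 0.2)]
for name, (x, z) in place_results_by_relevance(recipes).items():
    print(f"{name}: x={x:.2f} m, z={z:.2f} m")
```

A linear mapping keeps the relation between relevance and distance easy to read; a globally registered variant would compute the same layout relative to a fixed point in the environment instead of the user.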

Natural Interaction for Reality-based Information Retrieval

Based on the elementary interaction tasks of the Information Retrieval process, we distinguish four Natural Interaction tasks within RBIR, described below.

Natural Query Specification

A query can be submitted to an IR system in the form of

- text, like keywords, tags, natural language, artificial query languages, commands, etc.,

- key-value pairs, e. g., for property-based or faceted search,

- examples, in case of Query-by-Example / similarity-based search, or

- via associations, e. g., in browsing scenarios.
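The four query forms can be modeled as a tagged union so that later stages, for example a situated query representation, can handle each form explicitly. A minimal sketch with hypothetical class names; it does not prescribe how any particular IR backend encodes queries.

```python
from dataclasses import dataclass, field
from typing import Union


@dataclass
class TextQuery:
    text: str  # keywords, tags, natural language, commands, ...


@dataclass
class KeyValueQuery:
    facets: dict[str, str] = field(default_factory=dict)  # property-based / faceted search


@dataclass
class ExampleQuery:
    example_id: str  # e.g., an identified object or image used as the example


@dataclass
class AssociationQuery:
    start_id: str  # item the user starts browsing from
    relation: str  # e.g., "same author"


Query = Union[TextQuery, KeyValueQuery, ExampleQuery, AssociationQuery]


def describe(query: Query) -> str:
    """Short human-readable summary, e.g., for a situated query label."""
    if isinstance(query, TextQuery):
        return f'text: "{query.text}"'
    if isinstance(query, KeyValueQuery):
        return "facets: " + ", ".join(f"{k}={v}" for k, v in sorted(query.facets.items()))
    if isinstance(query, ExampleQuery):
        return f"similar to: {query.example_id}"
    if isinstance(query, AssociationQuery):
        return f"{query.relation} of: {query.start_id}"
    raise TypeError(f"unsupported query type: {type(query).__name__}")


print(describe(AssociationQuery("book-42", "same author")))  # same author of: book-42
```

In the literature search scenario, Alex's search for other publications by the same author could be expressed as an `AssociationQuery` starting from the identified book (the ID `book-42` is made up).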

Natural Result Exploration and Interaction

Depending on the concrete reference and mapping of the result representation, we envision spatial interaction techniques or gaze-supported multimodal interaction for natural result exploration. Locally or globally registered information spaces can be explored by physical movement of the user, e. g., determining the level of detail by the distance and the type of information by the angle to the object.
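Determining the level of detail by distance and the type of information by viewing angle can be sketched as a function of the user's position relative to an object on the ground plane. The distance bands and the four angular sectors with their information types are hypothetical values chosen for illustration.

```python
import math

# Hypothetical distance bands (meters) -> level of detail.
LOD_BANDS = [(1.0, "full"), (2.5, "summary"), (math.inf, "icon")]
# Hypothetical information types, one per equal angular sector around the object.
SECTORS = ["overview", "details", "related", "history"]


def view_state(user_xz: tuple[float, float], object_xz: tuple[float, float]) -> tuple[str, str]:
    """Return (level_of_detail, info_type) for a user at user_xz relative to an object at object_xz."""
    dx, dz = user_xz[0] - object_xz[0], user_xz[1] - object_xz[1]
    distance = math.hypot(dx, dz)
    lod = next(label for limit, label in LOD_BANDS if distance <= limit)
    # User's bearing around the object, measured from +z, normalized to [0, 2*pi).
    bearing = math.atan2(dx, dz) % (2 * math.pi)
    sector = int(bearing // (2 * math.pi / len(SECTORS))) % len(SECTORS)
    return lod, SECTORS[sector]


print(view_state((0.0, 0.5), (0.0, 0.0)))  # ('full', 'overview')
```

Walking toward the object reveals more detail, while walking around it switches between kinds of information; a real system would likely add hysteresis at band and sector boundaries to avoid flicker.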

Natural User Feedback

Interacting with a result set is, of course, an implicit form of relevance feedback. Additionally, we envision rearranging results through motion and gestures or with additional devices as a form of relevance feedback. We also propose the idea of metaphorical relevance feedback, which uses or establishes relations between results and the physical world.
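The framework does not prescribe how rearranged results become relevance feedback. One conventional option, swapped in here purely for illustration, is the Rocchio algorithm from the vector space model: results the user pulls close count as relevant, and results pushed far away count as non-relevant. The distance thresholds and the `feedback_from_layout` helper are hypothetical, and the default weights are common textbook values rather than values from the paper.

```python
def rocchio(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Rocchio update: move the query vector toward relevant and away from non-relevant results."""
    def centroid(vectors):
        if not vectors:
            return [0.0] * len(query)
        return [sum(col) / len(vectors) for col in zip(*vectors)]

    rel, non = centroid(relevant), centroid(non_relevant)
    updated = [alpha * q + beta * r - gamma * n for q, r, n in zip(query, rel, non)]
    return [max(w, 0.0) for w in updated]  # negative term weights are commonly clipped to 0


def feedback_from_layout(results, distances, near=1.0, far=2.5):
    """Hypothetical mapping of a spatial rearrangement to relevance judgments.

    results: {result_id: term vector}; distances: {result_id: distance to the user after rearranging}.
    Results pulled closer than `near` count as relevant, those pushed beyond `far` as non-relevant.
    """
    relevant = [results[r] for r, d in distances.items() if d < near]
    non_relevant = [results[r] for r, d in distances.items() if d > far]
    return relevant, non_relevant


vectors = {"a": [0.0, 1.0, 0.0], "b": [0.0, 0.0, 1.0], "c": [1.0, 1.0, 1.0]}
rel, non = feedback_from_layout(vectors, {"a": 0.5, "b": 3.0, "c": 1.5})
print(rocchio([1.0, 0.0, 0.0], rel, non))  # [1.0, 0.75, 0.0]
```

Results left at an intermediate distance (like `c`) contribute no judgment, so the user can express feedback only for the items they actually move.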

Natural Annotation

Beyond the rather implicit user feedback described above, we also envision a natural way for users to annotate physical stimuli, situated stimuli, and retrieved results, as well as the connections between stimuli and results. User annotations left in AR may inspire other users or help them discover new aspects or connections.

Publications

@inproceedings{Bueschel2018a,
  author = {Wolfgang B\"{u}schel and Annett Mitschick and Raimund Dachselt},
  title = {Demonstrating Reality-Based Information Retrieval},
  booktitle = {Proceedings of the 2018 CHI Conference Extended Abstracts on Human Factors in Computing Systems},
  series = {CHI EA '18},
  year = {2018},
  month = {4},
  isbn = {978-1-4503-5621-3},
  location = {Montreal, QC, Canada},
  pages = {D312:1--D312:4},
  numpages = {4},
  doi = {10.1145/3170427.3186493},
  url = {https://doi.org/10.1145/3170427.3186493},
  acmid = {3186493},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {augmented reality, immersive visualization, in situ visual analytics, reality-based information retrieval, spatial user interface}
}


@inproceedings{Bueschel:2018,
  author = {Wolfgang B\"{u}schel and Annett Mitschick and Raimund Dachselt},
  title = {Here and Now: Reality-based Information Retrieval},
  booktitle = {Proceedings of the ACM SIGIR Conference on Human Information Interaction and Retrieval},
  year = {2018},
  month = {3},
  location = {New Brunswick, NJ, USA},
  pages = {171--180},
  numpages = {10},
  doi = {10.1145/3176349.3176384},
  url = {https://doi.org/10.1145/3176349.3176384},
  publisher = {ACM}
}


Related Student Theses

Image Retrieval in der erweiterten Realität (Image Retrieval in Augmented Reality)

Philip Manja, November 25, 2016 to May 5, 2017

Supervisors: Annett Mitschick, Wolfgang Büschel, Raimund Dachselt